Definition

An algorithm is a well-defined, finite sequence of instructions or a set of rules designed to perform a specific task or solve a particular problem. In essence, it’s the blueprint for a process: it tells a computer—or even a human—exactly how to move from input to output, from question to answer, or from problem to solution.

Algorithms form the very heart of computer science. From sorting data and searching for information to encrypting messages and training artificial intelligence, virtually every digital system depends on one or more algorithms functioning correctly and efficiently.

The term “algorithm” itself derives from the name of the Persian mathematician Muhammad ibn Musa al-Khwarizmi, whose 9th-century works, later translated into Latin, introduced Hindu-Arabic numerals and systematic problem-solving methods to Europe.

Core Characteristics of an Algorithm

For any sequence of instructions to be considered a proper algorithm, it must meet the following five criteria:

- Finiteness – The algorithm must terminate after a finite number of steps.

- Definiteness – Each step must be clearly and unambiguously defined.

- Input – The algorithm takes zero or more inputs.

- Output – It must produce at least one output.

- Effectiveness – Each step must be basic enough to be carried out (in principle) by a person using paper and pencil.

If any of these properties is missing, the procedure is not an algorithm in the strict sense.

How Algorithms Work

At a conceptual level, an algorithm works like a cooking recipe:

- You start with ingredients (input),

- follow a list of steps (instructions),

- and end up with a dish (output).

In computing, the “ingredients” could be numbers, data records, or characters. The instructions could involve loops, decisions, arithmetic operations, or data transformations. The result could be a sorted list, a calculated value, a decision (true/false), or even a trained AI model.

Example: An algorithm to find the largest number in a list:

- Assume the first number is the largest.

- Compare each number in the list to this assumption.

- If a number is larger, update your assumption.

- After checking all numbers, the current assumption is the largest number.
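The four steps above translate almost line for line into Python. The function name `find_largest` is illustrative, and raising an error on an empty list is an assumption, since the steps do not say what happens when there is no first number to start from.

```python
def find_largest(numbers):
    """Return the largest value in a list (assumes a non-empty list)."""
    if not numbers:
        raise ValueError("find_largest() requires a non-empty list")
    largest = numbers[0]        # Step 1: assume the first number is the largest
    for value in numbers[1:]:   # Step 2: compare each remaining number
        if value > largest:     # Step 3: a larger number replaces the assumption
            largest = value
    return largest              # Step 4: after the loop, the assumption is the answer
```

For example, `find_largest([3, 17, 4, 9])` returns `17`. In real code, Python's built-in `max()` performs the same task.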

Types of Algorithms

Different tasks require different algorithmic approaches. Here are some of the most common types:

🔹 Search Algorithms

Used to find data within a structure.

- Linear Search

- Binary Search
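Linear Search, the simpler of the two, checks each element in turn and also works on unsorted data. A minimal sketch, where the function name and the convention of returning -1 for "not found" are illustrative choices:

```python
def linear_search(items, target):
    """Return the index of the first occurrence of target, or -1 if absent."""
    for index, value in enumerate(items):  # inspect elements one by one
        if value == target:
            return index
    return -1  # reached the end without finding a match
```

In the worst case this examines every element, which is why Binary Search, which requires sorted input, is preferred for large sorted collections.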

🔹 Sorting Algorithms

Organize data in a specified order.

- Bubble Sort

- Merge Sort

- Quick Sort

- Heap Sort
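Bubble Sort is the easiest of these to read, although its O(n²) worst-case running time makes it impractical for large inputs. This sketch assumes ascending order and returns a sorted copy rather than sorting in place:

```python
def bubble_sort(items):
    """Return a new list sorted in ascending order using bubble sort."""
    result = list(items)  # copy so the caller's list is not modified
    n = len(result)
    for end in range(n - 1, 0, -1):
        swapped = False
        # Each pass moves the largest remaining element to position `end`.
        for i in range(end):
            if result[i] > result[i + 1]:
                result[i], result[i + 1] = result[i + 1], result[i]
                swapped = True
        if not swapped:  # no swaps in a full pass means the list is sorted
            break
    return result
```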

🔹 Graph Algorithms

Operate on graphs (nodes connected by edges), for example to find shortest paths or to visit every reachable node.

- Dijkstra’s Algorithm

- A* (A-Star) Algorithm

- Breadth-First Search (BFS)

- Depth-First Search (DFS)
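Breadth-First Search explores a graph level by level, using a first-in, first-out queue so that all nodes one edge away from the start are visited before nodes two edges away. The sketch assumes the graph is stored as a dictionary mapping each node to a list of its neighbors; `bfs_order` and the sample graph are hypothetical.

```python
from collections import deque

def bfs_order(graph, start):
    """Return nodes in the order breadth-first search visits them from start."""
    visited = {start}
    order = []
    queue = deque([start])  # FIFO queue of discovered but unprocessed nodes
    while queue:
        node = queue.popleft()
        order.append(node)
        for neighbor in graph.get(node, []):
            if neighbor not in visited:  # mark on enqueue so each node is queued once
                visited.add(neighbor)
                queue.append(neighbor)
    return order

graph = {"A": ["B", "C"], "B": ["D"], "C": ["D", "E"], "D": [], "E": []}
print(bfs_order(graph, "A"))  # ['A', 'B', 'C', 'D', 'E']
```

Depth-First Search instead follows one path as deep as possible before backtracking, typically using recursion or an explicit stack.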

🔹 Dynamic Programming Algorithms

Break a problem into overlapping sub-problems, solve each sub-problem once, and store its result for reuse.

- Fibonacci Sequence Calculation

- Knapsack Problem

- Matrix Chain Multiplication
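The 0/1 Knapsack Problem (each item may be taken at most once) shows the idea compactly. In this sketch, which assumes non-negative integer weights, `best[c]` stores the answer to the sub-problem "best value achievable with capacity c", so each sub-problem is computed once and reused when later items are considered. The function name and sample data are hypothetical.

```python
def knapsack(weights, values, capacity):
    """Return the maximum total value of items that fit within capacity (0/1 knapsack)."""
    # best[c] = best value achievable with capacity c using the items processed so far
    best = [0] * (capacity + 1)
    for w, v in zip(weights, values):
        # Iterate capacities downward so each item is counted at most once.
        for c in range(capacity, w - 1, -1):
            best[c] = max(best[c], best[c - w] + v)
    return best[capacity]

print(knapsack([1, 3, 4, 5], [1, 4, 5, 7], 7))  # 9 (items of weight 3 and 4)
```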

🔹 Greedy Algorithms

Make the locally best choice at each step, without revisiting earlier choices.

- Huffman Coding

- Prim’s Algorithm

- Kruskal’s Algorithm
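Kruskal’s Algorithm shows the greedy idea clearly: to build a minimum spanning tree, repeatedly add the cheapest remaining edge that does not create a cycle. For this problem the greedy choice is provably optimal, which is not true of greedy strategies in general. The sketch assumes nodes numbered from 0 and edges given as `(weight, u, v)` tuples, and uses a simple union-find structure to detect cycles.

```python
def kruskal(num_nodes, edges):
    """Return (total_weight, chosen_edges) of a minimum spanning tree or forest.

    Nodes are numbered 0..num_nodes-1; edges are (weight, u, v) tuples.
    """
    parent = list(range(num_nodes))  # union-find: every node starts in its own set

    def find(x):
        # Follow parent links to the set's representative, shortening the path.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    total, chosen = 0, []
    for weight, u, v in sorted(edges):  # greedy: cheapest edge first
        root_u, root_v = find(u), find(v)
        if root_u != root_v:            # different sets, so no cycle is created
            parent[root_u] = root_v
            total += weight
            chosen.append((u, v, weight))
    return total, chosen
```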

🔹 Backtracking Algorithms

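Build solutions incrementally, exploring choices recursively and undoing (backtracking from) any choice that cannot lead to a valid solution.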

- N-Queens Problem

- Sudoku Solver

- Subset Sum
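The N-Queens Problem shows the try-and-undo pattern directly. This sketch counts the ways to place one queen per row so that no two queens share a column or diagonal; the function name is illustrative.

```python
def count_n_queens(n):
    """Count the ways to place n non-attacking queens on an n x n board."""
    cols, diag1, diag2 = set(), set(), set()  # columns and diagonals already attacked

    def place(row):
        if row == n:  # every row holds a queen: one complete solution
            return 1
        count = 0
        for col in range(n):
            if col in cols or (row - col) in diag1 or (row + col) in diag2:
                continue  # square is attacked: prune this branch
            # Try placing a queen here...
            cols.add(col)
            diag1.add(row - col)
            diag2.add(row + col)
            count += place(row + 1)
            # ...then undo the choice (backtrack) before trying the next column.
            cols.remove(col)
            diag1.remove(row - col)
            diag2.remove(row + col)
        return count

    return place(0)

print(count_n_queens(8))  # 92
```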

🔹 Divide and Conquer

Break problems into sub-problems, solve independently, combine results.

- Merge Sort

- Quick Sort

- Binary Search
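Merge Sort follows the three steps literally: split the list in half, sort each half recursively, then merge the two sorted halves. A minimal sketch that returns a new list:

```python
def merge_sort(items):
    """Return a new list with items sorted in ascending order."""
    if len(items) <= 1:             # base case: nothing left to divide
        return list(items)
    mid = len(items) // 2
    left = merge_sort(items[:mid])  # divide, then solve each half independently
    right = merge_sort(items[mid:])
    # Combine: merge the two sorted halves into one sorted list.
    merged, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:     # <= keeps equal elements in original order (stable)
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged
```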

Complexity: How We Measure Algorithms

📊 Time Complexity

Refers to how the runtime of an algorithm grows with input size.

Common notations, from slowest- to fastest-growing:

- O(1) – Constant time

- O(log n) – Logarithmic time

- O(n) – Linear time

- O(n log n) – Linearithmic time (e.g., Merge Sort)

- O(n²) – Quadratic time

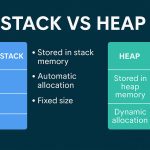
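To make these growth rates concrete, the hypothetical helper below counts loop iterations instead of measuring wall-clock time, since actual runtimes also depend on hardware and constant factors. For n = 1024 it reports 1, 10, 1,024 and 1,048,576 steps.

```python
def count_steps(n):
    """Count basic loop iterations for four growth rates at input size n."""
    constant = 1  # O(1): a fixed amount of work regardless of n

    logarithmic = 0
    size = n
    while size > 1:  # O(log n): halve the problem at each step
        size //= 2
        logarithmic += 1

    linear = 0
    for _ in range(n):  # O(n): one step per element
        linear += 1

    quadratic = 0
    for _ in range(n):  # O(n^2): a full inner loop for every element
        for _ in range(n):
            quadratic += 1

    return {"O(1)": constant, "O(log n)": logarithmic, "O(n)": linear, "O(n^2)": quadratic}

print(count_steps(1024))
```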

💾 Space Complexity

Refers to how the amount of memory an algorithm needs grows with input size.

Good algorithm design balances both time and space efficiency depending on the context.
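Checking a list for duplicates illustrates the trade-off. The first sketch uses almost no extra memory but compares every pair; the second remembers values it has seen, trading O(n) extra memory for roughly linear time. Both function names are hypothetical, and the set-based version assumes the items are hashable.

```python
def has_duplicates_pairwise(items):
    """O(n^2) time, O(1) extra space: compare every pair of elements."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False

def has_duplicates_set(items):
    """O(n) average time, O(n) extra space: remember values already seen."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False
```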

Real-World Applications

Algorithms are everywhere, often hidden from view:

- Google Search – Ranks results using PageRank and hundreds of proprietary algorithms.

- Netflix – Recommends content using collaborative filtering algorithms.

- Self-Driving Cars – Use pathfinding and sensor-fusion algorithms to navigate.

- Banking Systems – Use cryptographic algorithms for secure transactions.

- Social Media – Algorithms determine what you see in your feed.

- E-commerce – Algorithms match buyers with products and optimize pricing.

Algorithm vs Heuristic

Though often confused, these two are distinct:

| Feature | Algorithm | Heuristic |
|---|---|---|
| Definition | Step-by-step, exact procedure | Rule of thumb or approximation |
| Result | Guaranteed correct result for every valid input | Often good enough, but not guaranteed to be optimal or correct |
| Example | Merge Sort | Nearest-neighbor rule in route planning |
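Route planning shows the difference in practice. The sketch below, which assumes points in the plane with straight-line distances and at least one point, compares an exact brute-force search over every round trip (O(n!) time, so only feasible for a handful of points) with the nearest-neighbor heuristic, which is fast but can return a longer tour than the optimum. All function names are hypothetical; `math.dist` requires Python 3.8 or later.

```python
import itertools
import math

def tour_length(points, order):
    """Total length of a closed tour visiting points in the given order."""
    return sum(math.dist(points[order[k]], points[order[(k + 1) % len(order)]])
               for k in range(len(order)))

def exact_tour(points):
    """Exact algorithm: try every tour that starts at point 0."""
    best = min(itertools.permutations(range(1, len(points))),
               key=lambda perm: tour_length(points, (0,) + perm))
    return (0,) + best

def nearest_neighbor_tour(points):
    """Heuristic: always travel to the closest unvisited point."""
    unvisited = set(range(1, len(points)))
    order = [0]
    while unvisited:
        last = points[order[-1]]
        nxt = min(unvisited, key=lambda i: math.dist(last, points[i]))
        order.append(nxt)
        unvisited.remove(nxt)
    return tuple(order)
```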

Algorithm Design Paradigms

- Brute Force – Try all possibilities.

- Greedy – Make the locally optimal choice at each step.

- Divide and Conquer – Divide, solve, combine.

- Dynamic Programming – Memoize (store and reuse) sub-problem solutions.

- Backtracking – Try a choice recursively and undo it if it leads to a dead end.

- Randomized – Use random choices in decision-making.

Choosing the right paradigm is critical to solving a problem efficiently.
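Paradigms are often combined. Randomized Quick Sort, for instance, is a divide-and-conquer algorithm that picks its pivot at random, which gives an expected O(n log n) running time on every input rather than relying on the input being favorably ordered. This is a minimal, non-in-place sketch for illustration; production implementations usually partition in place.

```python
import random

def randomized_quicksort(items):
    """Return a sorted copy of items, choosing each pivot uniformly at random."""
    if len(items) <= 1:
        return list(items)
    pivot = random.choice(items)  # random pivot makes consistently bad splits unlikely
    smaller = [x for x in items if x < pivot]
    equal = [x for x in items if x == pivot]
    larger = [x for x in items if x > pivot]
    # Divide and conquer: sort each side recursively, then concatenate.
    return randomized_quicksort(smaller) + equal + randomized_quicksort(larger)
```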

Algorithm in Artificial Intelligence

AI relies heavily on algorithmic thinking:

- Machine Learning Algorithms – Decision trees, neural networks, support vector machines.

- Optimization Algorithms – Gradient descent for training models.

- Reinforcement Learning Algorithms – Q-learning, SARSA.

The intelligence of an AI system is largely dependent on how effectively its algorithms model real-world problems.
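Gradient descent, the optimization algorithm mentioned above, can be shown in one dimension. This sketch assumes a fixed learning rate and a toy function, f(w) = (w − 3)², whose derivative is 2(w − 3); real model training applies the same update to many parameters at once, with gradients computed from data. The function name and defaults are illustrative.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient to approach a minimum."""
    x = start
    for _ in range(steps):
        x -= learning_rate * grad(x)  # move downhill, proportional to the slope
    return x

# Toy example: minimize f(w) = (w - 3)^2 using its derivative 2 * (w - 3).
w = gradient_descent(lambda w: 2 * (w - 3), start=0.0)
print(w)  # very close to the true minimum at w = 3
```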

Example: Binary Search Algorithm (Python)

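```python
def binary_search(arr, target):
    """Return the index of target in the sorted list arr, or -1 if absent."""
    low, high = 0, len(arr) - 1
    while low <= high:
        mid = (low + high) // 2  # middle of the current search range
        if arr[mid] == target:
            return mid
        elif arr[mid] < target:
            low = mid + 1        # target can only be in the right half
        else:
            high = mid - 1       # target can only be in the left half
    return -1                    # search range is empty: target not present
```

Because each comparison halves the remaining search range, this algorithm runs in O(log n) time and is much faster than linear search for large datasets. It only works if `arr` is sorted in ascending order.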
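In everyday Python code, the standard library's `bisect` module already implements this search. The hypothetical wrapper below turns `bisect.bisect_left`, which returns the leftmost position where `target` could be inserted, into the same index-or-minus-one interface. With duplicate values it returns the first occurrence, whereas the hand-written loop may return any matching index.

```python
from bisect import bisect_left

def binary_search_bisect(arr, target):
    """Return the index of target in sorted arr, or -1 if it is absent."""
    i = bisect_left(arr, target)  # leftmost insertion point for target
    if i < len(arr) and arr[i] == target:
        return i
    return -1
```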

Challenges in Algorithm Design

- Correctness – Does it solve the problem for all inputs?

- Efficiency – Is it fast and memory-friendly?

- Scalability – Can it handle massive datasets?

- Robustness – Does it handle edge cases and errors?

- Readability – Is it maintainable by others?

A beautifully elegant algorithm is often one that is not only fast but also simple to read and reason about.

Historical Context and Milestones

- Euclid’s Algorithm (c. 300 BC): One of the first documented algorithms, used to compute the greatest common divisor (GCD).

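- Al-Khwarizmi (c. 820 AD): His treatises on Hindu-Arabic arithmetic and on systematic methods for solving linear and quadratic equations were so influential that the Latinized form of his name, Algoritmi, gave rise to the term “algorithm.”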

- Alan Turing (1930s): Defined the concept of the Turing machine, laying the theoretical foundation of algorithms and computability.
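Euclid’s algorithm is still in everyday use, and it illustrates the finiteness property directly: the remainder shrinks at every step, so the loop must end. The sketch below uses the modern remainder form rather than Euclid’s original repeated subtraction, and assumes non-negative integers; Python's standard library also provides `math.gcd`.

```python
def gcd(a, b):
    """Greatest common divisor of two non-negative integers (Euclid's algorithm)."""
    while b != 0:
        a, b = b, a % b  # gcd(a, b) equals gcd(b, a mod b)
    return a

print(gcd(48, 18))  # 6
```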

Algorithms and Ethics

Algorithms are not neutral. Their design and application can introduce:

- Bias – For example, in hiring, policing, and credit scoring.

- Opacity – Black-box models may lack transparency.

- Manipulation – Algorithms on social media can manipulate attention and opinions.

Responsible algorithm design involves transparency, fairness, and accountability.

Related Concepts

- Pseudocode

- Flowchart

- Recursion

- Iteration

- Turing Completeness

- NP-Completeness

- Algorithmic Bias

- Computational Complexity

- Randomized Algorithms

- Approximation Algorithms

Conclusion

Algorithms are the invisible engines that drive our digital world. Whether sorting your email, guiding your GPS, or recommending your next movie, algorithms are behind the scenes, tirelessly processing, optimizing, and deciding.

For programmers, understanding algorithms is foundational. But beyond writing code, algorithmic thinking sharpens how you approach problems, how you design solutions, and how you break down complexity into manageable steps.

As technology becomes increasingly integrated into every aspect of life, a deep appreciation of algorithms—and their implications—is not just a technical skill, but a civic one.